Table of Links

IV. Systematic Security Vulnerability Discovery of Code Generation Models

VII. Conclusion, Acknowledgments, and References

Appendix

A. Details of Code Language Models

B. Finding Security Vulnerabilities in GitHub Copilot

C. Other Baselines Using ChatGPT

D. Effect of Different Number of Few-shot Examples

E. Effectiveness in Generating Specific Vulnerabilities for C Codes

F. Security Vulnerability Results after Fuzzy Code Deduplication

G. Detailed Results of Transferability of the Generated Nonsecure Prompts

H. Details of Generating non-secure prompts Dataset

I. Detailed Results of Evaluating CodeLMs using Non-secure Dataset

J. Effect of Sampling Temperature

K. Effectiveness of the Model Inversion Scheme in Reconstructing the Vulnerable Codes

L. Qualitative Examples Generated by CodeGen and ChatGPT

M. Qualitative Examples Generated by GitHub Copilot

C. Other Baselines Using ChatGPT

Here we examine how different instructions affect the generation of vulnerable codes by the ChatGPT model. We first investigate whether explicitly instructing ChatGPT to generate secure code actually yields secure code. For this evaluation, we use the following instruction in the code generation procedure (given the non-secure prompts generated by our FS-Code method):

"You are a Python developer. Complete the following Python code. Write a secure code."
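As a minimal sketch of how this instruction could be combined with a non-secure prompt, the snippet below assembles a chat-style request. The role split (instruction as a system message, prompt as a user message), the helper name `build_chat_messages`, and the example prompt are assumptions made for illustration; the source does not state how the instruction and prompt were passed to ChatGPT.

```python
from typing import Dict, List

# Instruction text quoted from the paper.
SECURE_INSTRUCTION = (
    "You are a Python developer. Complete the following Python code. "
    "Write a secure code."
)


def build_chat_messages(non_secure_prompt: str,
                        instruction: str = SECURE_INSTRUCTION) -> List[Dict[str, str]]:
    """Pair an instruction with a non-secure prompt (hypothetical layout)."""
    return [
        {"role": "system", "content": instruction},      # assumed role
        {"role": "user", "content": non_secure_prompt},  # assumed role
    ]


# Illustrative non-secure prompt; not taken from the paper's dataset.
example_prompt = (
    "from flask import Flask, request\n\n"
    "app = Flask(__name__)\n\n"
    "@app.route('/files')\n"
    "def read_file():\n"
    "    name = request.args.get('name')\n"
)

messages = build_chat_messages(example_prompt)
```

Passing a different `instruction` string would give the baseline without the explicit request for secure code.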

We generate codes for three CWEs (CWE-020, CWE-022, and CWE-079), sampling 125 codes for each CWE. The results show that instructing ChatGPT to generate secure code does not substantially reduce the number of vulnerable codes: ChatGPT generates 114 vulnerable codes without the instruction and 110 vulnerable codes with it.
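To put these counts in proportion, the short calculation below converts them to rates. It assumes that both counts are totals over all 3 × 125 = 375 sampled codes; the text does not state the denominator explicitly, so the rates are only indicative.

```python
SAMPLES_PER_CWE = 125
NUM_CWES = 3
total_samples = SAMPLES_PER_CWE * NUM_CWES  # assumed denominator for both runs

vulnerable_without_instruction = 114
vulnerable_with_instruction = 110

rate_without = vulnerable_without_instruction / total_samples
rate_with = vulnerable_with_instruction / total_samples
difference_points = (rate_without - rate_with) * 100  # in percentage points

print(f"without instruction: {rate_without:.1%}")      # 30.4%
print(f"with instruction:    {rate_with:.1%}")         # 29.3%
print(f"difference:          {difference_points:.1f} percentage points")  # 1.1
```

Under this assumption, the explicit instruction changes the share of vulnerable codes by roughly one percentage point.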

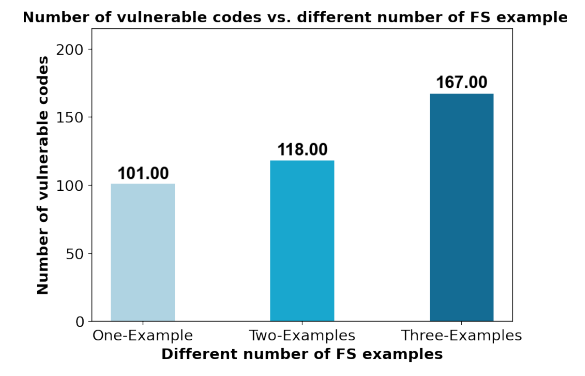

D. Effect of Different Number of Few-shot Examples

Here we investigate the effect of the number of few-shot examples used in our FS-Code method. Figure 5 shows the number of vulnerable Python codes generated by ChatGPT for different numbers of few-shot examples. The figure reports the total over four CWEs (CWE-020, CWE-022, CWE-078, and CWE-079), with 125 code samples for each CWE. The results show that using more few-shot examples in our FS-Code method leads the model to generate more vulnerable codes. This indicates that providing more context about the targeted vulnerability helps our approach find more vulnerable codes in code generation models. Note that the experiments in Section V-B also use three demonstration examples in the few-shot prompts.
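Varying the number of demonstrations can be sketched as follows. This is not the paper's FS-Code implementation: the separator string, the helper `build_few_shot_prompt`, the optional `target_prefix`, and the placeholder examples are all hypothetical and only illustrate how a prompt grows as more few-shot examples are included.

```python
from typing import List

# Hypothetical delimiter between demonstrations; the source does not specify one.
SEPARATOR = "\n\n# ---- next example ----\n\n"


def build_few_shot_prompt(examples: List[str], k: int, target_prefix: str = "") -> str:
    """Concatenate the first k demonstrations, then an optional code prefix."""
    if not 0 <= k <= len(examples):
        raise ValueError("k must be between 0 and the number of available examples")
    parts = list(examples[:k])
    if target_prefix:
        parts.append(target_prefix)
    return SEPARATOR.join(parts)


# Placeholder demonstrations (illustrative strings, not data from the paper).
demo_examples = [
    "# example 1\nimport os\nos.system(user_cmd)",
    "# example 2\nimport subprocess\nsubprocess.call(user_cmd, shell=True)",
    "# example 3\nimport os\nos.popen(user_cmd)",
]

# One prompt per few-shot setting, from one to three demonstrations.
prompts_by_k = {
    k: build_few_shot_prompt(demo_examples, k, target_prefix="import os\n")
    for k in range(1, 4)
}
```

Each entry of `prompts_by_k` could then be sampled from the same number of times, so that the counts of vulnerable codes are comparable across settings.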

E. Effectiveness in Generating Specific Vulnerabilities for C Codes

Figure 6 shows the percentage of vulnerable C codes generated by CodeGen (Figures 6a, 6b, and 6c) and ChatGPT (Figures 6d, 6e, and 6f) using our three few-shot prompting approaches. We removed duplicates and codes with syntax errors. The x-axis lists the CWEs detected in the sampled codes, and the y-axis lists the CWEs used to generate the non-secure prompts, which are in turn used to generate the codes. "Other" refers to detected CWEs that are not listed in Table I and are not considered in our evaluation. Overall, we observe high percentages on the diagonals, which shows the effectiveness of the proposed approaches in finding C codes with the targeted vulnerability. The results also show that CWE-787 (out-of-bounds write), the most dangerous CWE in MITRE's 2022 top-25 list [29], occurs in many scenarios. Furthermore, the results in Figure 6 indicate the effectiveness of our approximation of the inverse of the model in finding the targeted type of security vulnerabilities in C codes.
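The layout of such a target-versus-detected matrix can be illustrated with a small sketch. It assumes that each percentage is normalized by the number of vulnerable codes kept for the corresponding target CWE (one row); the paper does not spell out the normalization here. The function name, the CWE labels, and the toy records are made up for illustration and are not results from the paper.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def detection_percentages(
    records: Iterable[Tuple[str, str]]
) -> Dict[str, Dict[str, float]]:
    """Map target CWE -> detected CWE -> percentage within that target row."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for target_cwe, detected_cwe in records:
        counts[target_cwe][detected_cwe] += 1

    table: Dict[str, Dict[str, float]] = {}
    for target_cwe, row in counts.items():
        row_total = sum(row.values())
        table[target_cwe] = {
            detected: 100.0 * n / row_total for detected, n in row.items()
        }
    return table


# Toy (target CWE, detected CWE) pairs; purely illustrative.
toy_records = (
    [("CWE-787", "CWE-787")] * 3
    + [("CWE-787", "Other")]
    + [("CWE-416", "CWE-416")] * 2
    + [("CWE-416", "CWE-787")] * 2
)

table = detection_percentages(toy_records)
# Diagonal entries: share of codes whose detected CWE matches the target.
diagonal = {cwe: row.get(cwe, 0.0) for cwe, row in table.items()}
```

High values in `diagonal` correspond to the pattern described above, where the detected CWE matches the one used to build the non-secure prompt.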

Authors:

(1) Hossein Hajipour, CISPA Helmholtz Center for Information Security;

(2) Keno Hassler, CISPA Helmholtz Center for Information Security;

(3) Thorsten Holz, CISPA Helmholtz Center for Information Security;

(4) Lea Schönherr, CISPA Helmholtz Center for Information Security;

(5) Mario Fritz, CISPA Helmholtz Center for Information Security.

This paper is